Assume you want to write a code to calculate the multiplicat

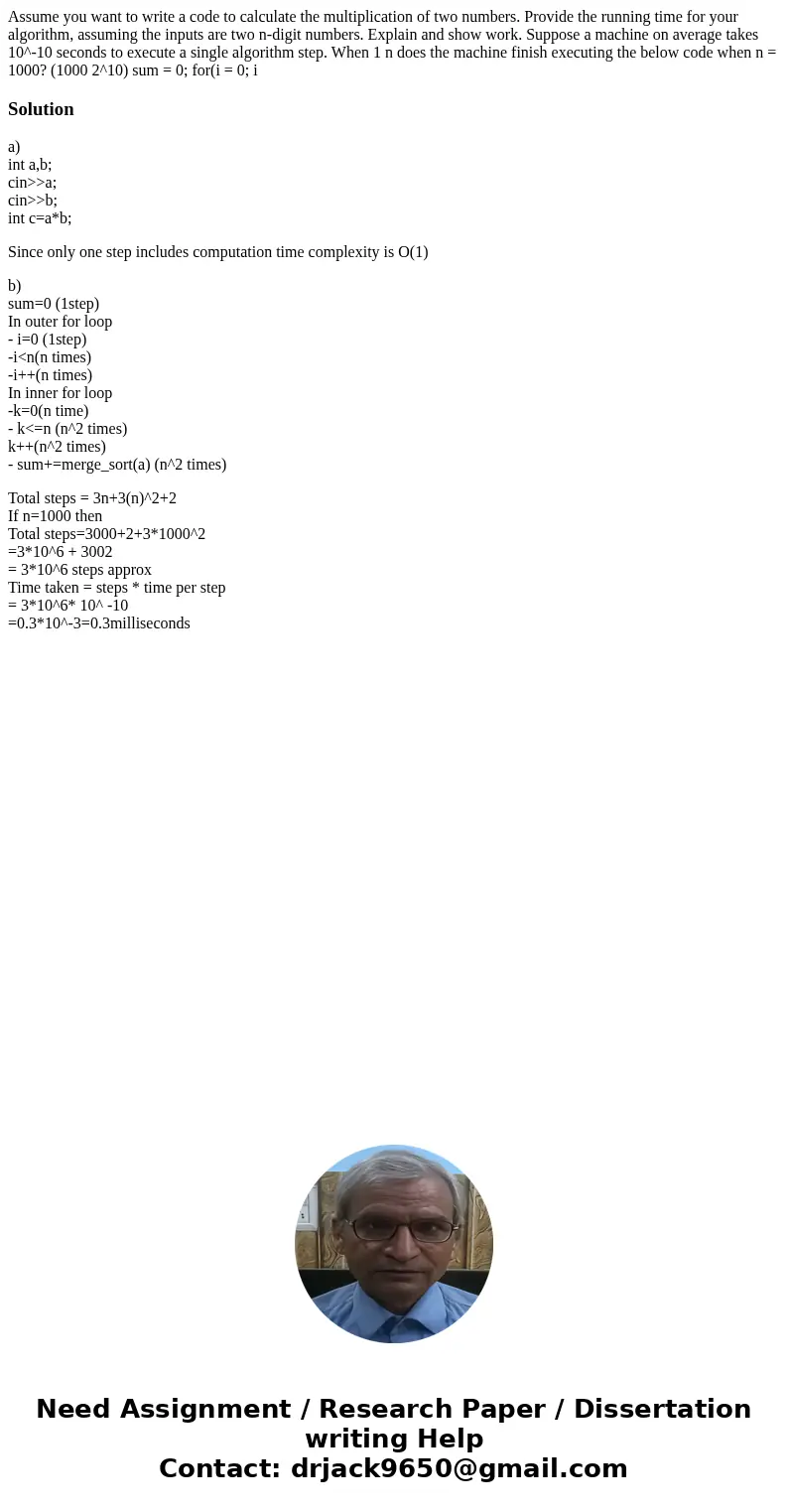

Assume you want to write a code to calculate the multiplication of two numbers. Provide the running time for your algorithm, assuming the inputs are two n-digit numbers. Explain and show work. Suppose a machine on average takes 10^-10 seconds to execute a single algorithm step. When 1 n does the machine finish executing the below code when n = 1000? (1000 2^10) sum = 0; for(i = 0; i

Solution

a)

int a,b;

cin>>a;

cin>>b;

int c=a*b;

Since only one step includes computation time complexity is O(1)

b)

sum=0 (1step)

In outer for loop

- i=0 (1step)

-i<n(n times)

-i++(n times)

In inner for loop

-k=0(n time)

- k<=n (n^2 times)

k++(n^2 times)

- sum+=merge_sort(a) (n^2 times)

Total steps = 3n+3(n)^2+2

If n=1000 then

Total steps=3000+2+3*1000^2

=3*10^6 + 3002

= 3*10^6 steps approx

Time taken = steps * time per step

= 3*10^6* 10^ -10

=0.3*10^-3=0.3milliseconds

Homework Sourse

Homework Sourse